bit to nibble – How to convert bit to nibble

Ever wonder how much information fits in a nibble? If you’re working with data at a low level — like microcontrollers or binary code — you’ve probably run into bits and maybe even nibbles. Converting between them is super simple, but understanding why this small unit matters makes the whole picture more interesting.

Let’s dig into how a single bit becomes a part of a nibble — and why that even matters today.

What exactly is a bit?

A bit (short for “binary digit”) is the smallest unit of digital information. It represents a state of either 0 or 1. Every piece of digital data you see — from this page you’re reading to your favorite song — is ultimately built from bits.

Bits are the building blocks of computing. They're used in everything from logic gates in processors to storage on a USB stick. Eight of them make up a byte, but before you get to a byte, you hit a halfway point: the nibble.

What is a nibble?

A nibble is equal to 4 bits, which makes it half of a byte. The nibble isn’t an SI unit, but the term is widely used in computer science, especially when discussing binary-coded decimal (BCD), hexadecimal numbers, and memory organization in low-level computing.

Each nibble can represent a number from 0 to 15 in decimal, or 0 to F in hexadecimal — making it the perfect chunk of data when working with hex systems, which are common in memory addressing and microcontroller programming.
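To make the link between nibbles and hex digits concrete, here is a minimal Python sketch that splits one byte into its two nibbles and joins them back. The function names `split_nibbles` and `join_nibbles` are made up for this illustration; they are not part of any standard library.

```python
from typing import Tuple


def split_nibbles(byte: int) -> Tuple[int, int]:
    """Return the (high, low) nibbles of a value in the range 0..255."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("expected a value that fits in one byte (0..255)")
    high = (byte >> 4) & 0xF  # upper four bits
    low = byte & 0xF          # lower four bits
    return high, low


def join_nibbles(high: int, low: int) -> int:
    """Rebuild a byte from two nibbles, each in the range 0..15."""
    if not (0 <= high <= 0xF and 0 <= low <= 0xF):
        raise ValueError("each nibble must be in the range 0..15")
    return (high << 4) | low


high, low = split_nibbles(0xF1)
print(high, low)           # 15 1
print(f"{high:X}{low:X}")  # F1
```

Each nibble prints as exactly one hex digit, which is why a byte is usually written as two hex characters.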

You’ll often see nibbles used in:

- Embedded systems
- Processor registers
- Compact data encoding
- Instruction sets for older or custom architectures

How to convert bit to nibble

This one’s as easy as it gets in binary math.

We know:

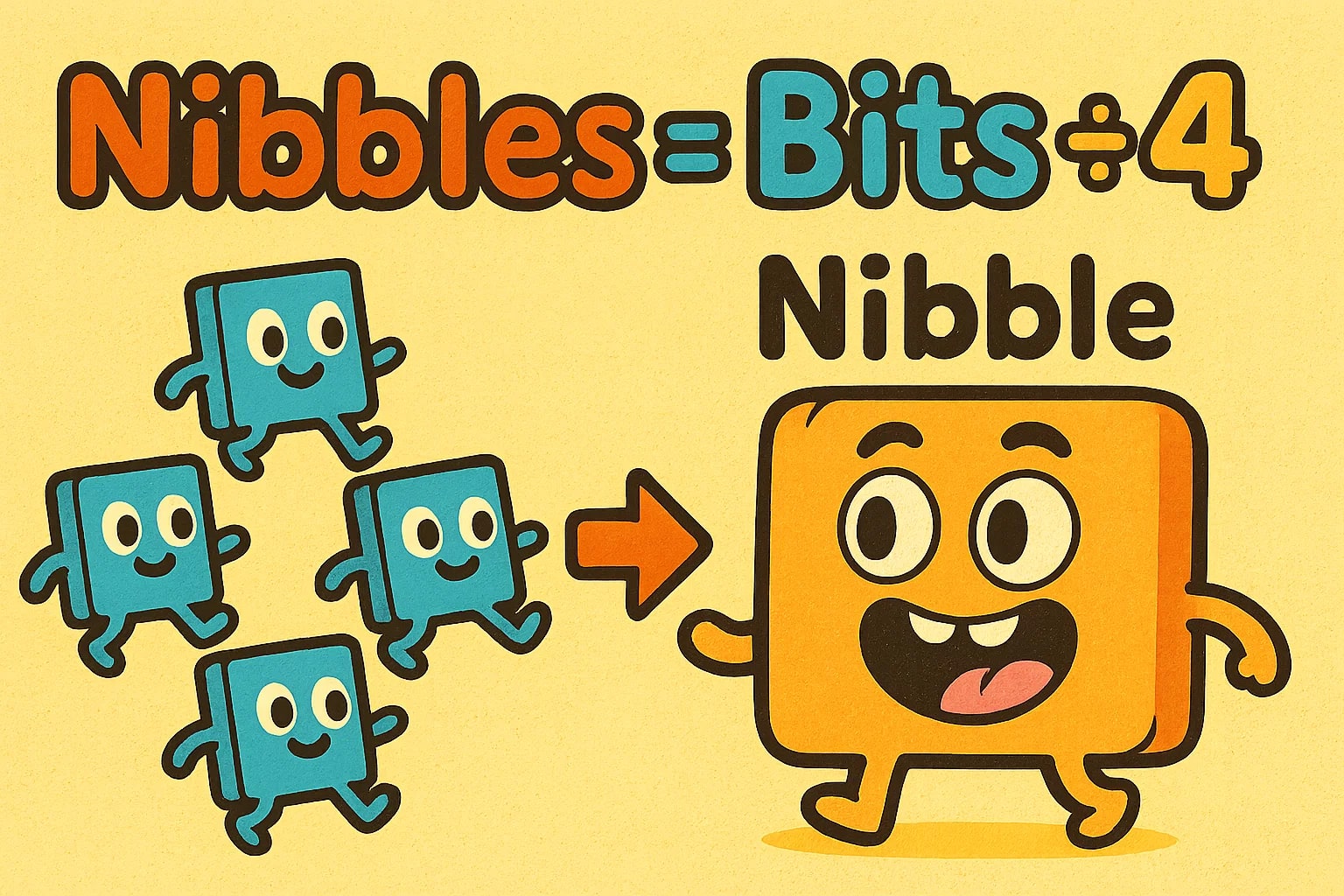

1 nibble = 4 bits

So the formula to convert from bit to nibble is:

nibbles = bits ÷ 4

✅ Example: Convert 16 bits to nibbles

Using the formula:

nibbles = 16 ÷ 4

nibbles = 4

So, 16 bits equals 4 nibbles.
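If you'd rather let code do the division, the formula can be sketched in a few lines of Python. The names `bits_to_nibbles` and `nibbles_needed` are hypothetical helpers for this example. The second one rounds up, on the assumption that you want to know how many whole nibbles are required to hold a bit count that isn't a multiple of 4.

```python
BITS_PER_NIBBLE = 4


def bits_to_nibbles(bits: float) -> float:
    """Exact conversion: nibbles = bits / 4 (the result may be fractional)."""
    if bits < 0:
        raise ValueError("bit count cannot be negative")
    return bits / BITS_PER_NIBBLE


def nibbles_needed(bits: int) -> int:
    """Whole nibbles required to hold `bits` bits (rounds up)."""
    if bits < 0:
        raise ValueError("bit count cannot be negative")
    return (bits + BITS_PER_NIBBLE - 1) // BITS_PER_NIBBLE


print(bits_to_nibbles(16))  # 4.0
print(bits_to_nibbles(10))  # 2.5
print(nibbles_needed(10))   # 3
```

The first function matches the unit conversion exactly, while the second answers the storage question, where a partial nibble still takes up a full one.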

Want to speed it up? Head over to the Data Storage Converter or explore all of Jetcalculator’s Conversion tools for more unit types.

Did you know?

- The term “nibble” is a tech pun: it’s half a byte (like a small bite of data).
- In hexadecimal, one nibble represents one digit. That’s why 2-digit hex codes (like 0xF1) represent exactly 1 byte: 2 nibbles.
- Older CPUs, like those in early calculators or 4-bit microcontrollers, worked with single nibbles instead of full bytes.
- BCD (Binary-Coded Decimal) stores each decimal digit in a nibble, which is how some early banking systems stored financial values.
- Nibbles are still useful in modern cryptography and data packing, especially in applications where space and simplicity matter.
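The BCD idea from the list above can be sketched in Python. This is a simplified illustration of packed BCD (two decimal digits per byte, one per nibble) for non-negative integers only. The function names are invented for the example, and real-world BCD formats often add a sign nibble or fixed field widths that this sketch leaves out.

```python
def to_packed_bcd(number: int) -> bytes:
    """Encode a non-negative integer as packed BCD, two digits per byte."""
    if number < 0:
        raise ValueError("this sketch handles non-negative integers only")
    digits = str(number)
    if len(digits) % 2:  # pad to an even number of digits
        digits = "0" + digits
    packed = bytearray()
    for i in range(0, len(digits), 2):
        high = int(digits[i])      # first digit goes in the upper nibble
        low = int(digits[i + 1])   # second digit goes in the lower nibble
        packed.append((high << 4) | low)
    return bytes(packed)


def from_packed_bcd(data: bytes) -> int:
    """Decode packed BCD back into an integer."""
    value = 0
    for byte in data:
        high, low = byte >> 4, byte & 0xF
        if high > 9 or low > 9:
            raise ValueError("a nibble above 9 is not a valid BCD digit")
        value = value * 100 + high * 10 + low
    return value


encoded = to_packed_bcd(1995)
print(encoded.hex())             # 1995
print(from_packed_bcd(encoded))  # 1995
```

Because each nibble holds one decimal digit, the hex dump of the encoded bytes reads the same as the original decimal number.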

When tiny units made big waves – Nibbles in early computing

Back in the 1970s and 80s, memory was expensive and chips were limited. Some early processors, like the Intel 4004 (released in 1971 and the world's first commercially available microprocessor), used a 4-bit architecture. That meant the chip handled data one nibble at a time, so every piece of data and every arithmetic operation worked in 4-bit units.

Programmers had to be incredibly efficient. A single nibble can only hold the values 0–15, so anything larger, from multi-digit numbers to character codes, had to be split across several nibbles and processed piece by piece. Systems like pocket calculators, early gaming consoles, and programmable logic controllers ran on this architecture.

Even today, 4-bit microcontrollers still exist. They’re not powering your phone, but they might be in your coffee maker, your thermostat, or even your car’s tire pressure sensors — places where a few nibbles are enough to get the job done.

So how many bits in a nibble again?

Just four. That’s the whole conversion.

nibbles = bits ÷ 4

It’s easy to overlook these small units in a world filled with gigabytes and terabytes. But when it comes to precision, speed, and compact design — nibbles still matter.

Try more conversions with our Data Storage Converter or explore Jetcalculator’s full set of Conversion tools — they’re built to keep your numbers simple and your results spot-on.