nibble to bit – How to convert nib to bit

A nibble might sound cute, but in the world of data it packs a meaningful punch. It’s not just a fun term: it plays a key role in how computers handle small chunks of binary data, especially in hexadecimal notation. If you’re working on microcontrollers, embedded systems, or learning about memory layouts, converting nibbles to bits is one of the most basic, and most useful, calculations you’ll make.

Let’s walk through what a nibble really is, how it compares to a bit, and how to switch between the two in a snap.

What is a nibble?

A nibble is a unit of digital information made up of 4 bits. It’s exactly half a byte. You’ll often see it used when dealing with hexadecimal numbers, because each hex digit represents one nibble (i.e., 4 bits).

This makes nibbles ideal in systems where compact and human-readable binary data is needed. You’ll run into them in:

- Low-level memory addressing
- Microprocessor registers
- Hex dumps in debugging tools
- Instruction sets and opcode formats
- BCD (binary-coded decimal) representations
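To make the link between bytes, nibbles, and hex digits concrete, here is a minimal Python sketch that splits one byte into its high and low nibbles. The function name `split_byte` is a hypothetical helper chosen for illustration, not part of any library.

```python
def split_byte(value: int) -> tuple:
    """Return (high_nibble, low_nibble) for a byte in the range 0..255."""
    if not 0 <= value <= 0xFF:
        raise ValueError("value must fit in one byte (0..255)")
    high = (value >> 4) & 0xF  # upper 4 bits
    low = value & 0xF          # lower 4 bits
    return high, low


high, low = split_byte(0xA7)
print(f"{high:X} {low:X}")      # each nibble prints as one hex digit: A 7
print(f"{high:04b} {low:04b}")  # and as exactly four bits: 1010 0111
```

Shifting right by 4 isolates the upper nibble, and masking with `0xF` keeps only the lower one, which is why the two hex digits of `0xA7` come out separately.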

The term "nibble" (sometimes spelled "nybble") is a playful nod to its relationship with a byte — a full “bite” of data.

What is a bit?

A bit is the smallest possible unit of digital information. It’s binary: either 0 or 1, yes or no, off or on. Bits are used in every part of computing — they’re the base layer of everything from your calculator to the world’s fastest supercomputer.

All larger units — including bytes, kilobytes, megabytes, and yes, nibbles — are built by grouping bits together.

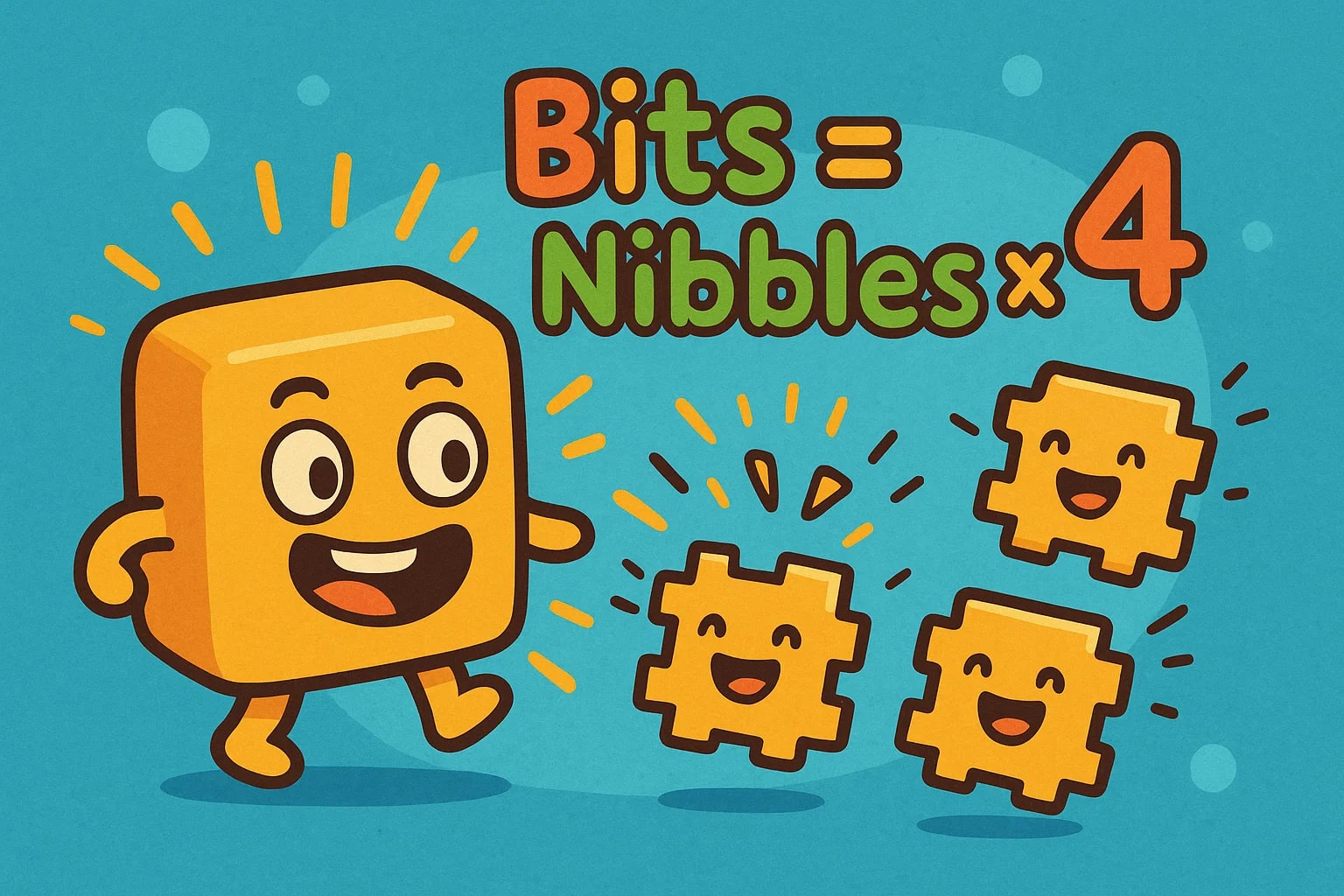

How to convert nibble to bit

This is one of the simplest conversions in computing.

1 nibble = 4 bits

So the formula is:

bits = nibbles × 4

✅ Example: Convert 6 nibbles to bits

bits = 6 × 4

bits = 24

So, 6 nibbles equals 24 bits.
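The same formula can be sketched in a few lines of Python. The names `BITS_PER_NIBBLE` and `nibbles_to_bits` are assumptions made for this example; the second call relies on the fact that a hex string carries one nibble per digit.

```python
BITS_PER_NIBBLE = 4  # by definition, 1 nibble = 4 bits


def nibbles_to_bits(nibbles: int) -> int:
    """Convert a count of nibbles to the equivalent count of bits."""
    if nibbles < 0:
        raise ValueError("nibble count cannot be negative")
    return nibbles * BITS_PER_NIBBLE


print(nibbles_to_bits(6))            # 24, matching the worked example
# A hex string has one nibble per digit, so its bit length follows directly.
print(nibbles_to_bits(len("1F3A")))  # 16
```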

For faster conversions across all storage units, check out our Data Storage Converter or explore the full suite of Conversion tools.

Did you know?

-

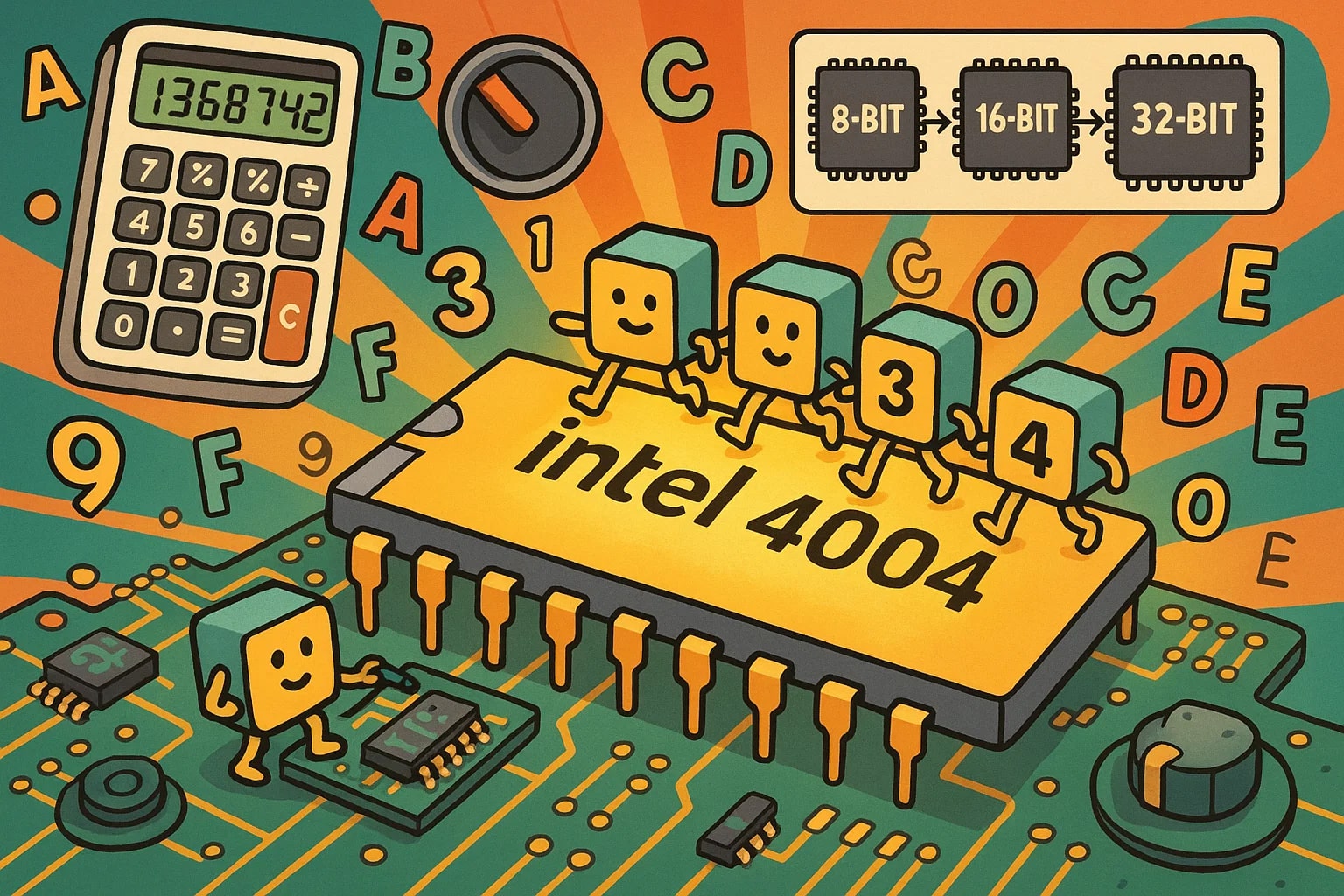

The first 4-bit microprocessors, like Intel’s 4004 in 1971, operated entirely on nibbles — it kicked off the modern microprocessor era.

-

In hexadecimal notation, one digit always equals one nibble, making hex ideal for representing binary in a readable format.

-

BCD (Binary-Coded Decimal) encodes each decimal digit as a nibble — it's still used in financial and industrial control systems today.

-

Some older calculators and embedded systems still use 4-bit architecture, where every operation is built on nibble-sized instructions.

-

Debugging tools often show memory in hex views — understanding nibbles helps interpret these readouts more easily and accurately.
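The BCD and hex-view points can be illustrated together. The sketch below packs a decimal number into BCD, two digits per byte, assuming the common "packed BCD" layout with the more significant digit in the high nibble; `to_packed_bcd` is a hypothetical name, and real systems may use other BCD variants.

```python
def to_packed_bcd(number: int) -> bytes:
    """Pack a non-negative integer into BCD, two decimal digits per byte."""
    if number < 0:
        raise ValueError("number must be non-negative")
    digits = str(number)
    if len(digits) % 2:
        digits = "0" + digits  # pad so the digits fill whole bytes
    packed = bytearray()
    for i in range(0, len(digits), 2):
        high = int(digits[i])      # first digit of the pair -> high nibble
        low = int(digits[i + 1])   # second digit of the pair -> low nibble
        packed.append((high << 4) | low)
    return bytes(packed)


print(to_packed_bcd(1971).hex())  # 1971
```

Because each decimal digit occupies exactly one nibble, the hex view of the packed bytes reads the same as the original decimal number.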

A bite out of computing history – Where nibbles changed the game

Back in the early 1970s, computing was expensive, and space was limited, not just physically but in terms of memory and data width. The release of Intel’s 4004 processor, the first commercially available microprocessor, introduced a 4-bit architecture: its data registers and arithmetic worked on a single nibble at a time, although its instructions and addresses were wider.

The nibble wasn’t just a clever term; it was a practical size for the time. Many early applications, like digital calculators and control circuits in appliances, only needed to work with simple numbers, often one decimal digit at a time. Since a nibble can hold values from 0 to 15, a single decimal digit (0 to 9) fits with room to spare.

As computing evolved, the architecture scaled — to 8-bit, 16-bit, and beyond — but the nibble never fully disappeared. It’s still essential in hexadecimal representation, network packet design, and compact memory formatting — especially in environments where every bit counts.

Four bits, one nibble, total clarity

This conversion is as clean as it gets:

bits = nibbles × 4

Whether you’re reading a hex table, programming a microcontroller, or debugging a low-level memory dump, understanding how nibbles map to bits gives you sharper control over binary data.

Want to explore other conversions? Visit the Data Storage Converter or browse more specialized Conversion tools to keep your calculations crisp and fast.