bit to word – How to convert bit to word

When working close to the hardware, bits don’t just stand alone — they group up into words. If you’re programming, dealing with memory allocation, or reading processor documentation, you’ll probably run into this unit. So how do you go from a basic bit to a word? That depends on the system — but we’ll show you the standard way to convert and what it really means under the hood.

What is a bit?

A bit is the simplest building block in the digital world — a binary digit that can be 0 or 1. It’s the language of computers at the most fundamental level. Everything from a Google search to a spaceship's guidance system starts here.

Bits are what processors handle, storage saves, and networks transmit — whether they’re working with thousands or trillions of them per second.

What is a word (in computing)?

In computing, a word refers to a fixed-length group of bits that a processor can handle in one operation. The actual size of a word varies depending on the architecture of the system:

- 16-bit systems → 1 word = 16 bits
- 32-bit systems → 1 word = 32 bits
- 64-bit systems → 1 word = 64 bits

So the size of a “word” is not universal — it depends entirely on the context. However, if you’re converting and no specific system is stated, 32 bits per word is often used as a standard for general reference.
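If you are unsure which word size applies to the machine in front of you, one rough check is to look at the width of a native pointer. The sketch below assumes that pointer width is a reasonable stand-in for word size, which holds on most mainstream desktop and server systems but is not guaranteed everywhere. The function name `native_pointer_bits` is made up for this example.

```python
import struct


def native_pointer_bits() -> int:
    """Return the width of a native pointer, in bits, for this Python build.

    Assumption: pointer width is used as a proxy for the word size.
    """
    # "P" is the struct format code for a C void pointer; calcsize gives bytes.
    return struct.calcsize("P") * 8


print(f"Pointer width on this build: {native_pointer_bits()} bits")
```

Note that this reports the width for the running Python build, so a 32-bit interpreter on a 64-bit operating system would report 32.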

How to convert bit to word

Assuming a 32-bit word system:

1 word = 32 bits

So the formula is:

words = bits ÷ 32

If you're using a different architecture (like 16-bit or 64-bit), just replace 32 with the appropriate word size.

Example: Convert 256 bits to words (32-bit system)

words = 256 ÷ 32

words = 8

So, 256 bits equals 8 words (on a 32-bit system).
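The same calculation can be sketched in a few lines of Python. The function names `bits_to_words` and `words_needed` are hypothetical helpers written for this example, and the 32-bit default simply mirrors the assumption used above. The second helper rounds up, which is useful when the bit count is not an exact multiple of the word size and you want to know how many whole words are required to hold the data.

```python
def bits_to_words(bits: int, word_size: int = 32) -> float:
    """Convert a bit count to words: words = bits / word_size."""
    if word_size <= 0:
        raise ValueError("word_size must be a positive number of bits")
    return bits / word_size


def words_needed(bits: int, word_size: int = 32) -> int:
    """Return the number of whole words required to hold `bits` bits."""
    if word_size <= 0:
        raise ValueError("word_size must be a positive number of bits")
    # Integer ceiling division: round up any partial word.
    return (bits + word_size - 1) // word_size


print(bits_to_words(256))       # 8.0 (32-bit words)
print(bits_to_words(256, 64))   # 4.0 (64-bit words)
print(words_needed(100))        # 4, because 100 bits do not fit in 3 words
```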

Need to switch word sizes? Use our Data Storage Converter or explore more Conversion tools for flexible calculations.

Did you know?

- The “word” size is directly tied to a processor’s architecture: it affects how fast and efficiently data can be processed.
- Early computers like the ENIAC used 10-digit decimal words, not binary; modern binary words evolved later.
- Assembly languages rely on word-size definitions for instruction encoding and operand sizes, especially for operations like MOV, ADD, or LOAD.
- In C programming, the sizes of data types like int, long, and pointers depend on the platform and compiler. Pointers and long often follow the word size, while int commonly stays at 32 bits even on 64-bit machines (and on 64-bit Windows, long stays at 32 bits too). This is one reason programs can behave differently across 32-bit and 64-bit machines.
- ARM processors, used in most smartphones, come in 32-bit and 64-bit variants. ARM’s documentation keeps the term “word” fixed at 32 bits even on 64-bit cores, so the name does not always track the register width.
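To see how C type sizes line up with the word size on a particular machine, you can query them from Python through the standard `ctypes` module. This is only an illustrative sketch: the helper name `c_type_bits` is made up here, and the numbers it prints reflect the C compiler conventions of the platform that built your Python interpreter, not a universal rule.

```python
import ctypes


def c_type_bits() -> dict:
    """Return the size in bits of a few C types on the current platform."""
    c_types = {
        "int": ctypes.c_int,
        "long": ctypes.c_long,
        "long long": ctypes.c_longlong,
        "void *": ctypes.c_void_p,
    }
    # ctypes.sizeof returns bytes, so multiply by 8 to get bits.
    return {name: ctypes.sizeof(ctype) * 8 for name, ctype in c_types.items()}


for name, bits in c_type_bits().items():
    print(f"{name}: {bits} bits")
```

Running this on a 32-bit and a 64-bit build side by side is a quick way to spot which types change size between them.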

The word behind the machines – Why CPUs care about this unit

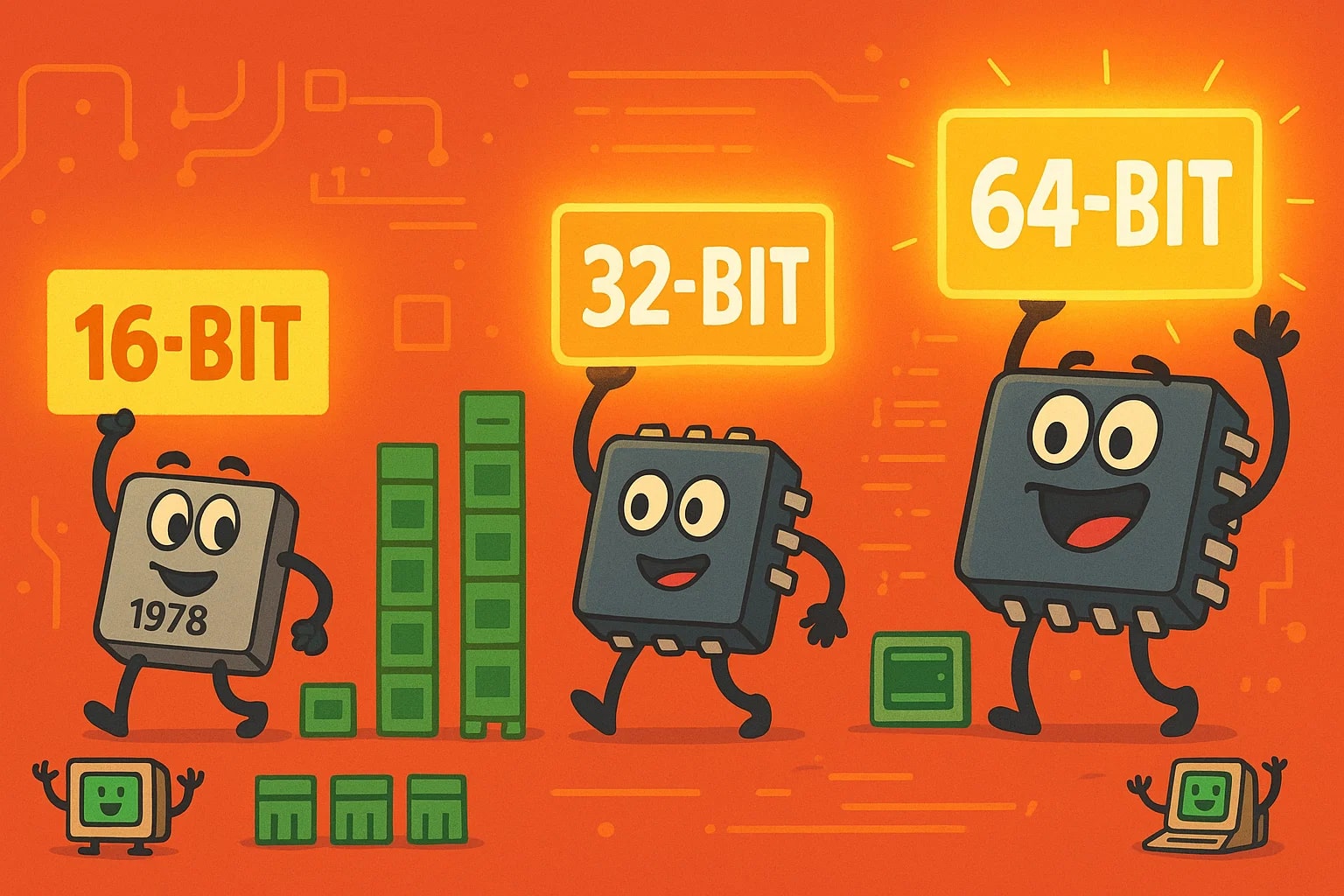

In 1978, Intel released the 8086 processor, which introduced the 16-bit word to the mainstream PC world. It was a major step forward — doubling the data width compared to 8-bit systems like the 8080. Suddenly, computers could process more data in a single operation, load larger instructions, and manage bigger memory spaces.

This idea of the word became a core part of how computer architecture evolved. From 16-bit to 32-bit and then to 64-bit systems, increasing the word size generally meant wider registers and a larger addressable memory. The word size influences how much RAM a system can address, how much data each instruction can process, and even security, since a larger address space leaves more room for protections such as address randomization.
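The link between width and memory can be made concrete with a little arithmetic. The sketch below assumes byte-addressable memory and an address that is exactly as wide as the word, which is a simplification: the 8086, for instance, paired 16-bit words with 20-bit addresses. The function name `addressable_bytes` is hypothetical.

```python
def addressable_bytes(address_bits: int) -> int:
    """Return how many bytes can be addressed with `address_bits` address bits.

    Assumption: memory is byte-addressable and every address bit is usable.
    """
    if address_bits < 0:
        raise ValueError("address_bits must not be negative")
    return 2 ** address_bits


for bits in (16, 32, 64):
    print(f"{bits}-bit addresses: {addressable_bytes(bits):,} bytes")
```

With 32-bit addresses this comes to 4,294,967,296 bytes (4 GiB), which matches the familiar memory ceiling of 32-bit systems.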

Even now, whether it’s a data center server or a Raspberry Pi, understanding how many bits fit into a word is key to writing efficient, low-level code.

Every instruction starts with a word

When you break things down, computing is just billions of bits — and those bits get grouped into words to be useful. Whether you’re writing firmware or just curious about how your machine runs code, converting bits to words helps clarify what’s happening inside the silicon.

Just remember:

words = bits ÷ word size

(Common default: ÷ 32)

If you’re working on a different architecture, adjust the formula accordingly, and use our Data Storage Converter or browse more Conversion tools to keep everything running smoothly.